Kubernetes (often abbreviated as K8s) is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes coordinates clusters of containers (such as those created with Docker) across a network of machines, making it easier to run workloads in a cloud-native environment.

Kubernetes is a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. It has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

👉 Why you need Kubernetes and what it can do

Containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime. For example, if a container goes down, another container needs to start. Wouldn’t it be easier if this behavior was handled by a system?

That’s where Kubernetes comes to the rescue! Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, and more. For example, Kubernetes can manage a canary deployment for your system.
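The "if a container goes down, another container needs to start" behavior can be pictured as a loop that compares the desired state with the actual state and corrects any difference. The sketch below is a toy model in plain Python, not Kubernetes code: the `Cluster` class, the `reconcile` function, and the `web` app name are all hypothetical names made up for illustration.

```python
import itertools


class Cluster:
    """Toy stand-in for a cluster: tracks which containers are running."""

    def __init__(self):
        self.running = {}                  # container name -> app name
        self._ids = itertools.count()      # source of unique name suffixes

    def start_container(self, app):
        name = f"{app}-{next(self._ids)}"
        self.running[name] = app
        return name

    def stop_container(self, name):
        self.running.pop(name, None)


def reconcile(cluster, app, desired_replicas):
    """Move the actual number of containers for `app` toward the desired number."""
    actual = sorted(n for n, a in cluster.running.items() if a == app)
    # Too few containers: start replacements.
    for _ in range(desired_replicas - len(actual)):
        cluster.start_container(app)
    # Too many containers: stop the surplus.
    for name in actual[desired_replicas:]:
        cluster.stop_container(name)


cluster = Cluster()
reconcile(cluster, "web", 3)               # initial rollout: 3 containers
crashed = sorted(cluster.running)[0]
cluster.stop_container(crashed)            # simulate a container going down
reconcile(cluster, "web", 3)               # the loop notices and starts a new one
print(len(cluster.running))                # 3
```

In a real cluster this kind of comparison runs continuously rather than on demand, which is why a failed container is replaced without anyone issuing a command.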

👉 Key Features of Kubernetes:

Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes is able to load balance and distribute the network traffic so that the deployment is stable.

Storage orchestration: Kubernetes allows you to automatically mount a storage system of your choice, such as local storage, public cloud providers, and more.

Automated rollouts and rollbacks: You can describe the desired state for your deployed containers using Kubernetes, and it can change the actual state to the desired state at a controlled rate. For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and adopt all their resources to the new containers.

Automatic bin packing: You provide Kubernetes with a cluster of nodes that it can use to run containerized tasks. You tell Kubernetes how much CPU and memory (RAM) each container needs. Kubernetes can fit containers onto your nodes to make the best use of your resources.

Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don’t respond to your user-defined health check, and doesn’t advertise them to clients until they are ready to serve.

Secret and configuration management: Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images, and without exposing secrets in your stack configuration.

Batch execution: In addition to services, Kubernetes can manage your batch and CI workloads, replacing containers that fail, if desired.

Horizontal scaling: Scale your application up and down with a simple command, with a UI, or automatically based on CPU usage.

IPv4/IPv6 dual-stack: Allocation of IPv4 and IPv6 addresses to Pods and Services.

Designed for extensibility: Add features to your Kubernetes cluster without changing upstream source code.
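To make the automatic bin packing feature above more concrete, here is a minimal sketch of fitting containers onto nodes based on their CPU and memory requests. It uses a simple "largest first, first fit" heuristic chosen for illustration; the real Kubernetes scheduler filters and scores nodes with far more criteria. The `Node`, `Container`, and `place` names, and the sample node and container values, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_free: int     # unallocated CPU in millicores (1000 = one core)
    mem_free: int     # unallocated memory in MiB


@dataclass
class Container:
    name: str
    cpu_request: int  # requested CPU in millicores
    mem_request: int  # requested memory in MiB


def place(containers, nodes):
    """Assign each container to the first node with enough free CPU and memory.

    Returns a dict of container name -> node name, or None if nothing fits.
    """
    placement = {}
    # Placing the largest requests first tends to pack nodes more tightly.
    ordered = sorted(
        containers, key=lambda c: (c.cpu_request, c.mem_request), reverse=True
    )
    for c in ordered:
        placement[c.name] = None
        for n in nodes:
            if n.cpu_free >= c.cpu_request and n.mem_free >= c.mem_request:
                n.cpu_free -= c.cpu_request   # reserve the resources
                n.mem_free -= c.mem_request
                placement[c.name] = n.name
                break
    return placement


nodes = [Node("node-a", 2000, 4096), Node("node-b", 1000, 2048)]
containers = [
    Container("api", 1500, 2048),
    Container("cache", 500, 3072),
    Container("worker", 1000, 1024),
]
result = place(containers, nodes)
print(result)  # {'api': 'node-a', 'worker': 'node-b', 'cache': None}
```

In this example `cache` cannot be placed because no node has 3072 MiB of memory left after `api` and `worker` are scheduled. This is why accurate CPU and memory requests matter: they are the information the placement decision is based on.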

👉 Common Use Cases:

Microservices architecture: Kubernetes is ideal for running microservices, where applications are split into smaller, independently deployable services.

Cloud-Native Applications: Kubernetes makes applications portable, so they can run across different cloud environments or in on-premises data centers.

CI/CD Pipelines: Kubernetes automates the orchestration of builds, tests, and deployments in Continuous Integration and Continuous Delivery (CI/CD) workflows.

👉 History of Kubernetes

Understanding the historical context behind Kubernetes helps to clarify why it became such a critical tool for modern cloud-native infrastructure.

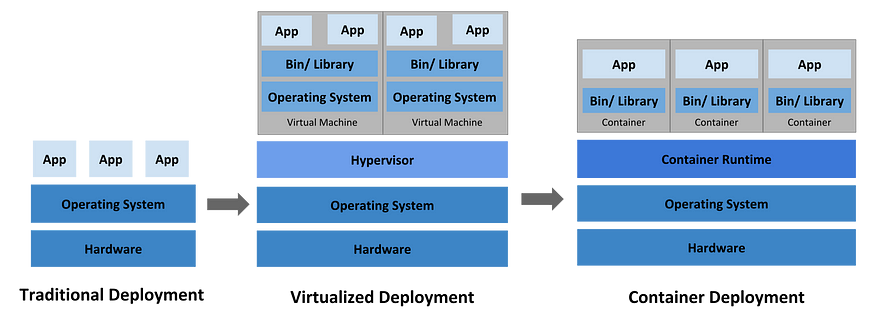

Traditional deployment era:

Early on, organizations ran applications on physical servers. There was no way to define resource boundaries for applications in a physical server, and this caused resource allocation issues. For example, if multiple applications run on a physical server, there can be instances where one application would take up most of the resources, and as a result, the other applications would underperform. A solution for this would be to run each application on a different physical server. But this did not scale as resources were underutilized, and it was expensive for organizations to maintain many physical servers.

Virtualized deployment era:

As a solution, virtualization was introduced. It allows you to run multiple Virtual Machines (VMs) on a single physical server’s CPU. Virtualization allows applications to be isolated between VMs and provides a level of security as the information of one application cannot be freely accessed by another application.

Virtualization allows better utilization of resources in a physical server and better scalability, because an application can be added or updated easily. It also reduces hardware costs, and much more. With virtualization you can present a set of physical resources as a cluster of disposable virtual machines.

Each VM is a full machine running all the components, including its own operating system, on top of the virtualized hardware.

Container deployment era:

Containers are similar to VMs, but they have relaxed isolation properties to share the Operating System (OS) among the applications. Therefore, containers are considered lightweight. Similar to a VM, a container has its own filesystem, share of CPU, memory, process space, and more. As they are decoupled from the underlying infrastructure, they are portable across clouds and OS distributions.

Docker Launch (March 2013): The release of Docker popularized the use of containers by making it easier to package, deploy, and run applications in isolated environments. Docker provided a consistent interface for developers to build applications that could run anywhere, giving rise to containerized applications.

Containers have become popular because they provide extra benefits, such as:

Agile application creation and deployment: increased ease and efficiency of container image creation compared to VM image use.

Continuous development, integration, and deployment: provides for reliable and frequent container image build and deployment with quick and efficient rollbacks (due to image immutability).

Dev and Ops separation of concerns: create application container images at build/release time rather than deployment time, thereby decoupling applications from infrastructure.

Observability: not only surfaces OS-level information and metrics, but also application health and other signals.

Environmental consistency across development, testing, and production: runs the same on a laptop as it does in the cloud.

Cloud and OS distribution portability: runs on Ubuntu, RHEL, CoreOS, on-premises, on major public clouds, and anywhere else.

Application-centric management: raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

Loosely coupled, distributed, elastic, liberated micro-services: applications are broken into smaller, independent pieces and can be deployed and managed dynamically, rather than running as a monolithic stack on one big single-purpose machine.

Resource isolation: predictable application performance.

Resource utilization: high efficiency and density.

Need for Orchestration:

As developers began adopting Docker, it became clear that managing multiple containers across clusters of machines was a complex task. Scheduling, scaling, networking, and resource management were manual processes that didn’t scale well.

Birth of Kubernetes (2014):

Kubernetes Project Announcement (June 2014): Recognizing the need for a solution to manage containerized applications at scale, Google engineers started the Kubernetes project. It was an open-source system inspired by the principles of Borg and Omega, Google's internal cluster management systems, and designed to orchestrate containers across distributed systems. Kubernetes was released under an open-source license and developed publicly from the beginning.

Kubernetes 0.1 Release (July 2014): The first public release of Kubernetes, 0.1, came just a month after its announcement. This early version provided basic container orchestration features, such as scheduling, networking, and service discovery.

Kubernetes Joins the Cloud Native Computing Foundation (CNCF, 2015):

Formation of the CNCF (December 2015): The Cloud Native Computing Foundation (CNCF) was established under the Linux Foundation to promote the growth of cloud-native technologies, with Kubernetes as its first project. CNCF provided Kubernetes with a neutral governance model and fostered collaboration among the growing Kubernetes community.